
Cadence: Last Holdout for Vision + AI Programmability

Cadence Design Systems, Inc. might have found the secret recipe for success in an increasingly hot AI processing-core market by promoting a suite of DSP cores that accelerate both embedded vision and artificial intelligence.

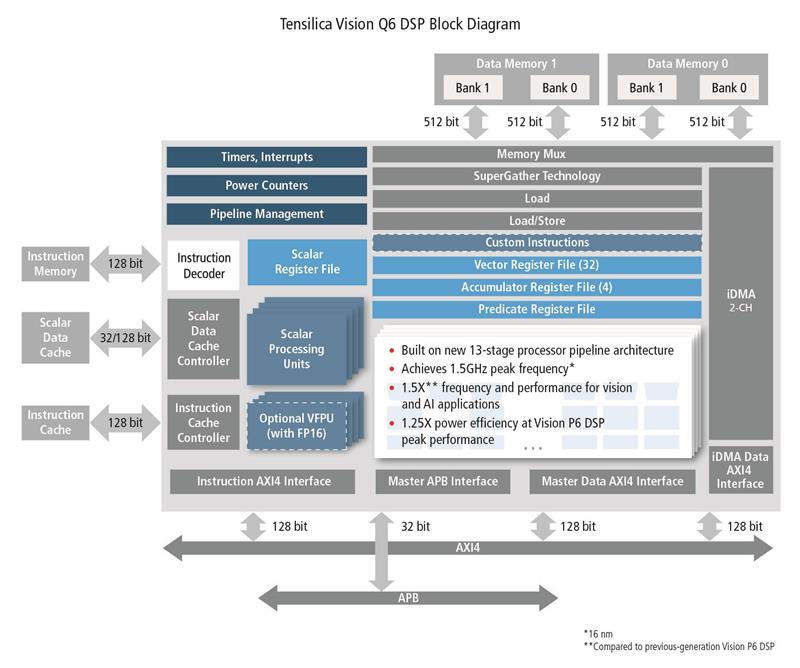

The San Jose-based company is rolling out the Cadence Tensilica Vision Q6 DSP on Wednesday (April 11). Built on a new architecture, the Vision Q6 offers faster embedded vision and AI processing than its predecessor, the Vision P6 DSP, while occupying the same floorplan area.

The Vision Q6 DSP is expected to go into SoCs that will drive such edge devices as smartphones, surveillance cameras, vehicles, AR/VR headsets, drones, and robots.

The new Vision Q6 DSP builds on Cadence’s success with the Vision P6 DSP. High-profile mobile application processors such as HiSilicon’s Kirin 970 and MediaTek’s P60 both use the Vision P6 DSP core.

Among automotive SoCs, Dream Chip Technologies is using four Vision P6 DSPs. Geo Semiconductor’s GW5400 camera video processor has adopted Vision P5 DSP.

Mike Demler, senior analyst, The Linley Group, told EE Times that where Vision Q6 DSP differs from its competitors is “its multi-purpose programmability.” Among all computer-vision/neural-network accelerators on the market today, Demler noted, “Cadence is the last holdout for a completely programmable multipurpose architecture. They go for flexibility over raw performance.”

Demler added that Vision Q6 DSP is “comparable to the earlier Ceva XM4 and XM6, also DSP-based. But those cores add a dedicated multiplier-accumulator (MAC) array to accelerate convolution neural networks (CNNs).” He observed that Synopsys started with a CPU-MAC combination in its EV cores, but moved on to a CPU-DSP-MAC accelerator combo in the EV6x. Ceva went to a more special-purpose accelerator architecture in NeuPro, which looks more like Google’s TPU. Demler said, “Ceva’s NeuPro has much higher neural-network performance, but so do most of the other IP competitors. It’s a growing list now with Nvidia’s open-source NVDLA, Imagination, Verisilicon, Videantis, and others.”

Vision + AI strategy

Thus far, Cadence is sticking to its original strategy of vision + AI on a single DSP core.

Demler believes that “SoC providers are seeing an increased demand for vision and AI processing to enable innovative user experiences like real-time effects at video-capture frame rates.”

Indeed, Lazaar Louis, senior director of product management and marketing for Tensilica IP at Cadence, explained that more embedded vision applications have begun leveraging AI algorithms. Meanwhile, some AI functions improve when better vision processing comes first, he added.

AI-based face detection is an example. When the vision stage first captures a face at multiple resolutions, the AI stage can detect it more reliably. Conversely, to offer a vision feature like “bokeh” with a single camera, AI first performs segmentation, followed by blurring and de-blurring in the vision operation. Both applications demand a mix of vision and AI operations, and Cadence’s DSPs can put both operations in the camera pipeline, explained Louis.
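The single-camera bokeh flow described above (segment first, then blur) can be sketched in a few lines. This is only an illustration, not Cadence’s implementation: the function names `box_blur` and `bokeh` are hypothetical, the segmentation mask is assumed to come from a neural network and is simply passed in, and a plain box filter stands in for whatever blur kernel a real camera pipeline would use.

```python
def box_blur(img, radius=1):
    """Blur a 2-D grayscale image (list of rows) with a simple box filter."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            # Average every in-bounds pixel in the (2*radius+1)^2 window.
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def bokeh(img, mask, radius=1):
    """Keep pixels where mask is 1 (subject) and blur the rest (background).

    `mask` is assumed to be the output of an AI segmentation step.
    """
    blurred = box_blur(img, radius)
    return [
        [img[y][x] if mask[y][x] else blurred[y][x] for x in range(len(img[0]))]
        for y in range(len(img))
    ]


# Hypothetical 3x3 frame: one bright "subject" pixel in the centre.
frame = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
subject_mask = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
result = bokeh(frame, subject_mask)
```

The point of the sketch is the ordering: the AI step produces the mask, and the vision step consumes it, which is why running both on one core in the camera pipeline is attractive.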

More significantly, though, Cadence is hoping to use its well-proven vision DSP as a “Trojan horse” to open the door to design wins in present and future SoCs expected to handle more AI processing, acknowledged Louis.

On one hand, Cadence has both Vision P6 DSP and Vision Q6 DSP, designed to enable general-purpose embedded vision and more vision-related on-device AI applications. On the other, Cadence has a standalone AI DSP core, the Vision C5, which offers more “broad-stroke AI,” according to Louis, for always-on neural network applications.

While the Vision P6 and the Vision Q6 are used for applications requiring AI performance ranging from 200 to 400 GMAC/sec, the Vision Q6 DSP can be paired with the Vision C5 DSP for applications requiring greater than 384-GMAC/sec AI performance, according to Cadence.
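To give a feel for the GMAC/sec figures quoted above, the following sketch estimates the multiply-accumulate rate a single convolution layer needs at a given frame rate. The layer dimensions and frame rate are hypothetical, the helper names are made up for illustration, and the only number taken from the article is the 384-GMAC/sec pairing point attributed to Cadence.

```python
def conv_macs(out_h, out_w, in_ch, out_ch, kernel):
    """MACs for one standard convolution layer on one frame."""
    return out_h * out_w * in_ch * out_ch * kernel * kernel


def required_gmac_per_sec(macs_per_frame, fps):
    """Sustained rate, in GMAC/sec, needed to process `fps` frames per second."""
    return macs_per_frame * fps / 1e9


def exceeds_pairing_point(gmac_per_sec, pairing_point=384.0):
    """True if the workload is above the 384-GMAC/sec figure cited in the article."""
    return gmac_per_sec > pairing_point


# Hypothetical layer: 112x112 output, 32 input channels, 64 output channels, 3x3 kernel.
layer_macs = conv_macs(112, 112, 32, 64, 3)
layer_rate = required_gmac_per_sec(layer_macs, fps=30)
```

A full network sums this over all of its layers, so real workloads land far above the single-layer figure computed here; the sketch only shows how frame rate and layer size multiply into a GMAC/sec requirement.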

Q6 advantages

The new Q6 comes with a deeper, 13-stage processor pipeline and a system architecture designed for use with large local memories. Together, these enable the Vision Q6 DSP to achieve a 1.5-GHz peak frequency and a 1-GHz typical frequency at 16 nm in the same floorplan area as the Vision P6 DSP, according to Cadence. As a result, designers using the Vision Q6 DSP can develop high-performance products that meet increasing vision and AI demands as well as power-efficiency needs.

But what sorts of applications are driving the vision and AI operations to run faster?

In mobile applications, Louis said that users want to apply “beautification” features not just to still photos, but also to video. “That demands higher speed,” he said. In AR/VR headsets, simultaneous localization and mapping (SLAM) and image processing demand lower latency. System designers also want to use AI-based eye-tracking so that an AR/VR headset can render one object in focus and the rest blurry, rather than a screen with various focal points, which could create visual conflicts. Such applications also need much higher processing speed, added Louis.

More surveillance cameras are now designed to do “AI processing on device,” according to Louis, rather than sending captured images to the cloud. When such a camera sees a person at the door, the device there decides who it is, detects any anomaly, and puts out an alert. Again, this demands more powerful vision and AI processing, concluded Louis.

Deeper pipeline, new ISA, software frameworks

The Vision Q6’s deeper pipeline comes with a better branch prediction mechanism that reduces branch overhead, according to Cadence. The Q6 also comes with a new instruction set architecture that further improves imaging, computer vision, and AI performance. For example, imaging kernels run up to 2x faster on the Vision Q6 DSP, claimed Louis.

It’s important to note that the Q6 is backward-compatible with the Vision P6 DSP. It separates scalar and vector execution, which results in higher scalar performance, according to Cadence.

Beyond the hardware enhancements, Louis stressed Cadence’s commitment to support a variety of deep-learning frameworks. The Vision Q6, for example, comes with Android Neural Network support, enabling on-device AI for Android platforms.

Louis also pointed out that the Q6 DSP now offers custom layer support. When customers devise unique innovations to augment standard networks, “we can support them,” he said. The Vision Q6 DSP extends broad support for various types of neural networks for classification (e.g., MobileNet, Inception, ResNet, VGG), segmentation (e.g., SegNet, FCN), and object detection (e.g., YOLO, RCNN, SSD).

Pointing to the company’s full ecosystem of software frameworks and compilers for all vision programming styles, Louis noted that the Q6 supports AI applications developed in the Caffe, TensorFlow, and TensorFlow Lite frameworks through the Tensilica Xtensa Neural Network Compiler (XNNC). The XNNC maps neural networks into optimized executable code for the Vision Q6 DSP, leveraging a comprehensive set of optimized neural network library functions.

The Vision Q6 DSP is now available to all customers. Select customers are integrating the new DSP core in their products, according to Cadence.
